NO.PZ2024120401000073

Question:

We regress a stock's returns against a market index according to the following OLS model: Return(i) = β0 + β1*Index(i) + u(i). However, our regression is guilty of omitted variable bias. If our regression indeed suffers from omitted variable bias, which of the following is MOST likely true?

Options:

A.

B.

The OLS assumption that [X(i), Y(i)], i = 1, ..., n, are i.i.d. random draws is incorrect

C.

The OLS assumption that large outliers are unlikely is incorrect

D.

The assumption of no perfect multicollinearity is incorrect

Explanation:

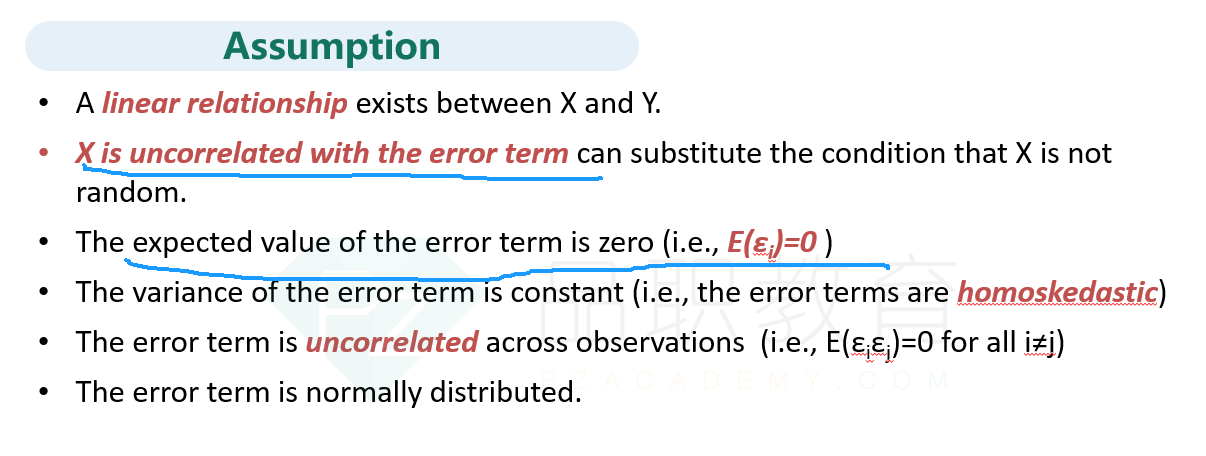

If an omitted variable is a determinant of Y(i), then it is in the error term, and if it is correlated with X(i), then the error term is correlated with X(i).

Because u(i) and X(i) are correlated, the conditional mean of u(i) given X(i) is non-zero. This correlation therefore violates the first least squares assumption, and the consequence is serious: The OLS estimator is biased. This bias does not vanish even in very large samples, and the OLS estimator is inconsistent.
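A small simulation may make the argument above more concrete. This is only a sketch under assumed numbers, not part of the original question: the true slope `beta1 = 1.0`, the omitted factor's effect `gamma = 0.5`, and its loading on the index `rho = 0.8` are all hypothetical. Under this setup the fitted slope should settle near beta1 + gamma * Cov(X, Z) / Var(X) = 1.4 rather than 1.0, and a larger sample does not remove the gap.

```python
import random


def ols_slope(x, y):
    """Slope of a simple OLS regression of y on x (with intercept)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def simulate_slope(n, seed=0, beta1=1.0, gamma=0.5, rho=0.8):
    """Fit Return on Index only, leaving out a factor correlated with Index.

    All parameter values are hypothetical and chosen for illustration.
    """
    rng = random.Random(seed)
    index = [rng.gauss(0, 1) for _ in range(n)]
    # Omitted factor: correlated with the index through rho.
    omitted = [rho * x + rng.gauss(0, 1) for x in index]
    # True model includes the omitted factor; the regression below ignores it,
    # so gamma * omitted ends up inside the error term u(i).
    returns = [
        beta1 * x + gamma * z + rng.gauss(0, 1)
        for x, z in zip(index, omitted)
    ]
    return ols_slope(index, returns)


true_beta1 = 1.0
biased_limit = 1.0 + 0.5 * 0.8  # beta1 + gamma * Cov(X, Z) / Var(X)

slope_small = simulate_slope(1_000)
slope_large = simulate_slope(100_000)

print(f"true beta1:          {true_beta1:.3f}")
print(f"slope, n = 1,000:    {slope_small:.3f}")
print(f"slope, n = 100,000:  {slope_large:.3f}")
print(f"value OLS drifts to: {biased_limit:.3f}")
```

The larger sample gives a more precise estimate, but of the wrong number. That is what "biased and inconsistent" means here: the problem lies in the conditional mean of u(i) given X(i), not in the sampling scheme, outliers, or multicollinearity.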

Could the instructor please explain this question? I did not understand it at all. Thank you.